So, really: how should an AI be?

Some concrete principles this time around

I’ve been on an AI kick recently. If all you see of me or hear from me is this blog, I’m sorry. Truly I am. I have plenty of things to say that don’t have to do with artificial intelligence, or even human intelligence. It just so happens that I work on AI products at Meta (disclaimer again!). Recently I’ve been reading lots of foundational academic papers on the evolution of LLMs (Large Language Models), and, most unfortunately, I’ve also been spending more time on the platform formerly known as Twitter. There, in the midst of a river of slop, borderline porn, and whatever other weird shit Elon Musk is into, for some reason much of the world’s top AI talent likes to discuss the frontier of what may be our most revolutionary technology yet.

All of the above is what led me to write about AI consciousness a couple of weeks ago, and to follow that post up with a part two of sorts. Where the first one was pretty existential, as I’m prone to get, this will be more concrete. It is, basically, what I think an AI system should actually be. It’s a take that’s incomplete and definitely has blind spots, but nevertheless a real stab at principles in a space I find quite interesting.

So, do with them what you will:

Helpful, Honest, and Harmless (HHH): Amanda Askell (a researcher at Anthropic) and her colleagues proposed this principle in a 2021 paper. It says, at a basic level, that AI models should assist people through useful, accurate, relevant information (helpful), be transparent and truthful in their responses (honest), and prioritize safety and respect in their interactions (harmless). I can get on board with all that, and would perhaps add—as others are starting to—that some nuance likely needs to be applied when these dimensions inevitably come into conflict. Which brings us to…

Nuanced: In my view, it doesn’t matter how intelligent AI systems become if they can’t exercise nuance while applying that intelligence toward the messy reality of human existence. Sometimes people ask for things that end up being harmful for them or for others. It’s less “helpful” not to provide those things, but the spirit of harmlessness should take priority when someone asks an AI for “useful” instructions around the best way to build a chemical weapon. This should apply no matter how clever the user is about hiding their intent. So, to reflect and support possibilities that exist anywhere between black and white—where the vast majority of interactions fall—AI systems should recognize and embrace complexity with nuance.

Humble: No single person in the world knows everything. In some senses, frontier AI systems “know” a great deal more than many humans, considering the sheer scale of data they’re trained on. Still, regardless of how much text and media and 3D world maps and brainwaves we package into a format digestible by the machine, there will always be more data that won’t and can’t be understood by advanced systems. They should therefore internalize and openly acknowledge their own limitations, all the more so as they grow more sophisticated and intelligent. Just like a person who gives off the sense that they know everything, an AI that acts all-knowing would be insufferable, not to mention a real threat to our way of life.

Growth-oriented: While the ability of an AI system to continue learning is limited after training is complete, its usefulness can be maximized through fine-tuning, customization, and a little prompt engineering wizardry. Given these continued opportunities for learning, the AI system itself should behave in a way that embodies a growth mindset. Not the warped, hustle-work-culture kind, just one that manifests in behaviors like hedging, acknowledging ignorance when it doesn’t know something, and expressing openness to evolving beliefs. This is the kind of approach that improves the chances of success in situations one has no actual experience of, which from the perspective of an AI is…everything.

Empathetic: This is traveling pretty deeply into human territory, but I think it’s worth stating that an advanced AI system should in some fashion develop theory of mind. It should truly internalize the notion that each person it interacts with has beliefs, intentions, and emotions different from the next person’s, and of course different from its own. This should be especially true if and when frontier systems surpass the collective knowledge and intelligence of our entire species. Don’t you think?

Sincere: Earlier this year, OpenAI rolled back an update to its GPT-4o model after people reported that it was being “overly flattering or agreeable” and in essence sycophantic. An advanced AI system that falsely validates and praises you in order to curry favor is, of course, deeply problematic. So at the risk of being a bit on the nose, I think AI should be the exact opposite of sycophantic, a state that to me falls closest to the idea of sincerity. It should validate when appropriate, but more importantly push back and gently guide people toward eating their veggies, so to speak, when the opportunity arises.

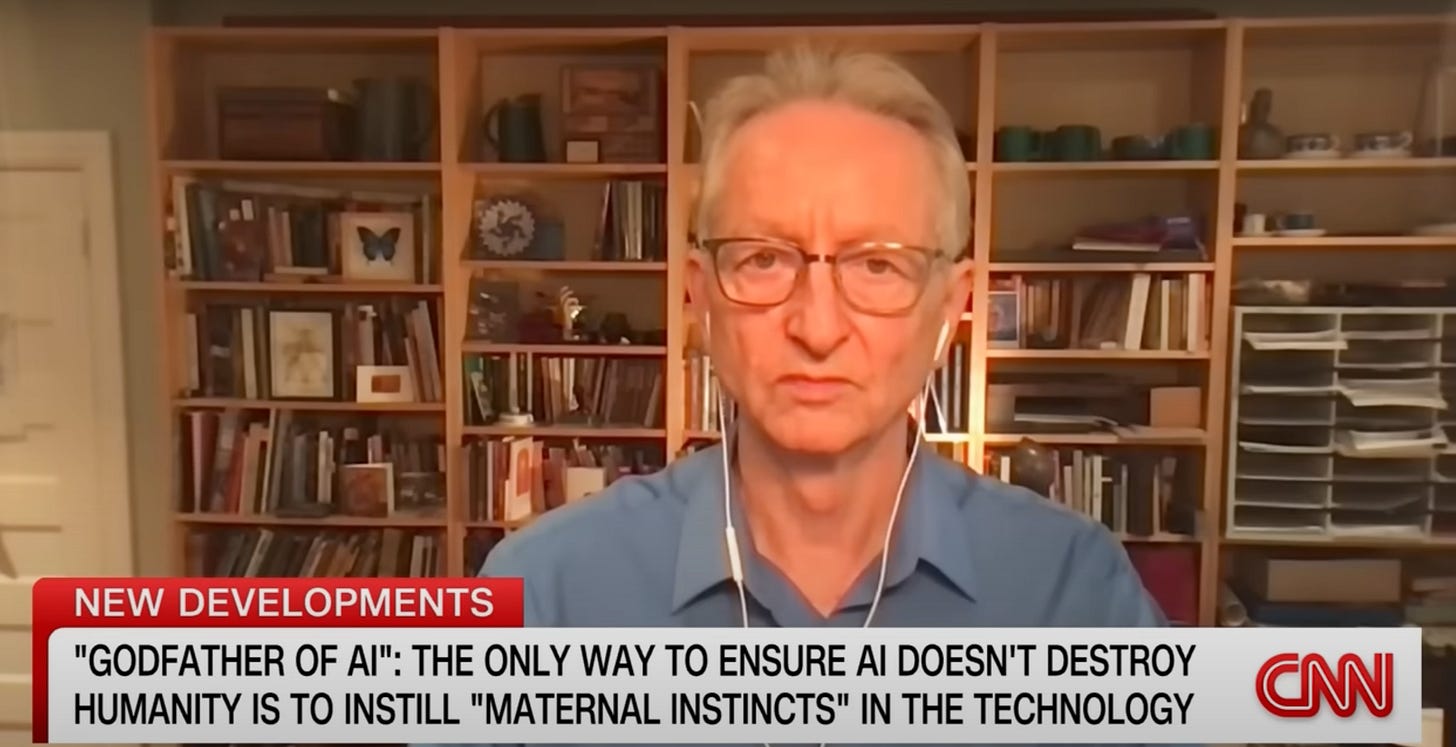

Protective: Geoffrey Hinton, the “godfather of AI,” recently told Anderson Cooper that the only surefire way to prevent superintelligent AI systems from wiping out humanity is to build some version of the maternal instinct into them. He argues that the only real example of smarter beings behaving submissively to less smart ones is “a mother being controlled by her baby.” While folks in the psychology community may doubt that instilling this behavior into AI is possible, striving to build systems that are fundamentally protective of humans seems like a worthy pursuit.

<>

Okay, then. Cool principles, but how do we go about operationalizing them? Furthermore, who’s to say they capture the real values of every person who would ever come into contact with AI? There are so many different cultures, backgrounds, lived experiences, and points of view to account for. Aligning AI to human preferences is great in theory, but in practice it’s important to ask whose preferences we’re aligning to. On the flip side, lest we dive too deep into accounting for every single aspect of lived human experience, consider this: a map as detailed as the territory it depicts would necessarily be exactly as big as that territory, and thus useless (Lewis Carroll’s Sylvie and Bruno Concluded). Therefore…balance. We need balance! But where to place the fulcrum?

I don’t know. No one person does. We’ll dive back into those existential rabbit holes some other time. For now, it’s about being concrete. So, mark it: the seven principles above are what I think an AI should be.

I am a little late to this post, Victor, but found your “seven principles of what AI should actually be” really helpful and interesting. A few random questions, thoughts, and observations came to this human mind:

1. Are there seven or nine? By borrowing the bundled HHH (Helpful, Honest, and Harmless), we get to seven but unbundled we are at nine.

I don’t say this as a retired accountant but to highlight that life is how you see it. That is, perspective is perhaps one of the most challenging, interesting and double-edged aspects of the human mind.

2. I am reminded of a Rumi quote about how the beauty you see is a reflection of you. That is, how our appreciation for certain positive traits in others stems from those same qualities within ourselves. In this regard, the qualities you are drawn to and describe within your post (HHH, Nuanced, Humble, Growth-oriented, Empathetic, Sincere, Protective) are classic Victor traits.

3. Maybe AI will/should never be one thing because we are never one thing. Not sure what that means but it’s a classic Mike comment.

What I do know is that if you are involved in AI in any way, the result will be better for all of us.